Topic – Convolutional Neural Network Explained | Architecture Explanation in Detail

What Is a Neural Network?

A neural network is a machine learning model that is most commonly trained with supervised learning. An artificial neural network is composed of artificial neurons, or nodes, connected to each other in layers, loosely inspired by the networks of neurons in the brain.

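A neural network learns to extract information from data and make predictions by adjusting the weights of the connections between its neurons during training. The problem with a standard, fully connected neural network, however, is that it has a very large number of parameters to learn, especially on image data, which makes it prone to overfitting.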

What about a neural network that can learn complex non-linear relationships but with fewer parameters?

This is where the Convolutional Neural Network (CNN) comes into play.

A CNN is a type of neural network designed for grid-like data such as images, which enables machines to "see" images and perform visual tasks.
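To make the "fewer parameters" point concrete, here is a back-of-the-envelope sketch in plain Python. The layer sizes (a 32×32 RGB input, 100 dense units, 100 filters of size 3×3) are hypothetical, chosen only for illustration, and the helper names are made up. The counting rule is one weight per connection plus one bias per unit or filter, which is how Keras counts parameters for `Dense` and `Conv2D` layers with `use_bias=True`.

```python
# Hypothetical sizes, chosen only to illustrate the difference.
HEIGHT, WIDTH, CHANNELS = 32, 32, 3   # a small RGB image
UNITS = 100                           # neurons in a fully connected layer
FILTERS = 100                         # filters in a convolutional layer
KERNEL = 3                            # 3x3 kernel


def dense_params(n_inputs: int, n_units: int) -> int:
    # Every input is connected to every unit, plus one bias per unit.
    return n_inputs * n_units + n_units


def conv2d_params(kernel: int, in_channels: int, n_filters: int) -> int:
    # Each filter has kernel x kernel x in_channels weights plus one bias.
    # Note that the image height and width do not appear at all.
    return kernel * kernel * in_channels * n_filters + n_filters


dense = dense_params(HEIGHT * WIDTH * CHANNELS, UNITS)
conv = conv2d_params(KERNEL, CHANNELS, FILTERS)
print(f"dense layer: {dense:,} parameters")  # dense layer: 307,300 parameters
print(f"conv layer:  {conv:,} parameters")   # conv layer:  2,800 parameters
```

The convolutional layer reuses the same small filter at every position of the image (weight sharing), so its parameter count does not grow with the image size.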

Image classification, image recognition, and object detection are some of the most common areas where CNNs are used.

In this article, Convolutional Neural Network Explained, we will explore how CNNs work in depth!

So, let us dive into the CNNs!

The Convolution Operation

In mathematics, convolution is an operation on two functions ($f$ and $g$) that produces a third function ($f * g$) that expresses how the shape of one is modified by the other.

The term convolution refers to both the result function and to the process of computing it.
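For reference, these are the standard textbook definitions of convolution for continuous functions and for discrete sequences; the discrete form is the one that matters for digital signals and images:

```latex
(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau
\qquad\qquad
(f * g)[n] = \sum_{m=-\infty}^{\infty} f[m]\, g[n - m]
```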

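Since a CNN learns from image data, convolution is how it processes images: a small kernel (a matrix of weights) is slid over the image, and each pixel value is re-estimated as a weighted sum of the pixels under the kernel. Depending on the kernel, this can sharpen, blur, or detect edges; an identity kernel (1 in the center, 0 elsewhere) leaves the pixel values unchanged. The operation can also change, and typically shrink, the spatial shape of the image.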

In the discrete convolution operation, we have a sequence of inputs, and we calculate the value at the current position as a weighted sum of the current input and its previous inputs, where the weights come from the kernel.
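A minimal sketch of this in plain Python, assuming the textbook discrete definition $(f * g)[n] = \sum_m f[m]\,g[n-m]$ with finite sequences (the function name `convolve_1d` is hypothetical). Because $g[n-m]$ is only defined for $m \le n$, each output depends only on the current input and the ones before it, with the kernel $g$ supplying the weights:

```python
def convolve_1d(f: list, g: list) -> list:
    """Full discrete convolution of signal f with kernel g:
    out[n] = sum over m of f[m] * g[n - m]."""
    n_out = len(f) + len(g) - 1
    out = [0] * n_out
    for n in range(n_out):
        for m in range(len(f)):
            # Only include terms where the kernel index n - m is valid.
            if 0 <= n - m < len(g):
                out[n] += f[m] * g[n - m]
    return out


signal = [1, 2, 3]
kernel = [1, 0, -1]
print(convolve_1d(signal, kernel))  # [1, 2, 2, -2, -3]
```

For this input, NumPy's `np.convolve([1, 2, 3], [1, 0, -1])` gives the same result.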

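A 2-D convolution works the same way, except that the kernel is a small matrix that slides over the input both from left to right and from top to bottom.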
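The sketch below slides a 3×3 kernel over a toy 7×7 array with NumPy (`conv2d_valid` is a hypothetical helper name, and the array values are made up). Like the convolution layers in deep learning libraries, it does not flip the kernel, so strictly speaking it computes cross-correlation; for learned kernels the difference does not matter. It uses the identity kernel, which simply copies the pixel under the kernel's center:

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (no padding, stride 1) and return the
    weighted sum at each position. The kernel is not flipped, as in CNN
    layers, so this is technically cross-correlation."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


image = np.arange(49, dtype=float).reshape(7, 7)  # toy 7x7 "image"
identity = np.array([[0, 0, 0],
                     [0, 1, 0],
                     [0, 0, 0]], dtype=float)     # identity kernel

out = conv2d_valid(image, identity)
print(out.shape)  # (5, 5)
```

The identity kernel reproduces the inner 5×5 pixels unchanged, and the result is 5×5 rather than 7×7, which is the shrinking effect discussed in the Output Dimensions section.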

In Keras, the 1-D and 2-D convolution layers are implemented by the following classes:

Conv1D class

```python
tf.keras.layers.Conv1D(
    filters,
    kernel_size,
    strides=1,
    padding="valid",
    data_format="channels_last",
    dilation_rate=1,
    groups=1,
    activation=None,
    use_bias=True,
    kernel_initializer="glorot_uniform",
    bias_initializer="zeros",
    kernel_regularizer=None,
    bias_regularizer=None,
    activity_regularizer=None,
    kernel_constraint=None,
    bias_constraint=None,
    **kwargs
)
```

Conv2D class

```python
tf.keras.layers.Conv2D(
    filters,
    kernel_size,
    strides=(1, 1),
    padding="valid",
    data_format=None,
    dilation_rate=(1, 1),
    groups=1,
    activation=None,
    use_bias=True,
    kernel_initializer="glorot_uniform",
    bias_initializer="zeros",
    kernel_regularizer=None,
    bias_regularizer=None,
    activity_regularizer=None,
    kernel_constraint=None,
    bias_constraint=None,
    **kwargs
)
```

The Output Dimensions

How do we compute the dimensions of the output after the convolution operation?

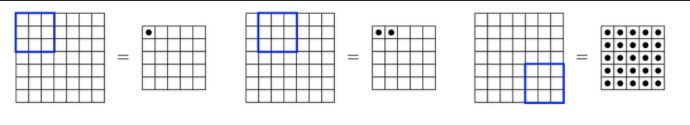

Consider a 2-D input of size 7×7 to which we apply a 3×3 filter, starting from the top-left corner of the image.

As we slide the kernel over the image from left to right and top to bottom, the output turns out to be smaller than the input: 5×5 instead of 7×7.

But why is the output smaller?

The reason is that we do not compute a weighted average and re-estimate the value for every pixel in the input: a 3×3 kernel cannot be centered on a pixel at the border, because part of the kernel would fall outside the image.

This is true for all the shaded border pixels in the image (the outermost ring of pixels, with a 3×3 kernel), hence the size of the output is reduced.
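The shrinking can be predicted with the standard output-size formula. For an input of width $N$, a kernel of width $K$, padding $P$ on each side, and stride $S$ (how many pixels the kernel moves per step), the output width is $O = \lfloor (N + 2P - K)/S \rfloor + 1$. A small Python sketch, where `conv_output_size` is a hypothetical helper name:

```python
def conv_output_size(n: int, k: int, padding: int = 0, stride: int = 1) -> int:
    """Output size along one dimension for an n-wide input, a k-wide kernel,
    `padding` zeros added on each side, and the given stride."""
    return (n + 2 * padding - k) // stride + 1


print(conv_output_size(7, 3))             # 5: no padding
print(conv_output_size(7, 3, padding=1))  # 7: one ring of zero-padding
print(conv_output_size(7, 3, stride=2))   # 3: the kernel jumps 2 pixels per step
```

With $N = 7$ and $K = 3$, no padding gives 5, matching the 5×5 output above, and one ring of zero-padding gives back 7.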

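This kind of convolution, without any padding, is called a "valid" convolution; it corresponds to padding="valid" in the Keras signatures above.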

But this has a drawback.

Every time the output is smaller than the input, we lose information at the borders of the image, which can be valuable, and the feature maps keep shrinking as we stack more layers!

What if we can get the output of the same size as the input?

The original input is 7×7, and we want the output to be 7×7 as well. To do that, we can add an artificial pad of zeros evenly around the input (zero-padding). For a 3×3 kernel, one pixel of zeros on each side is enough, which makes the padded input 9×9.

Now we can also place the 3×3 kernel K centered on the corner pixels and compute the weighted average of their neighbors.

By adding this artificial padding around the input, we keep the output the same shape as the input!
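A minimal NumPy sketch of zero-padding, assuming a toy 7×7 image of ones and a 3×3 averaging kernel K (both hypothetical, as is the helper name `conv2d_valid`). `np.pad` adds one ring of zeros, so the padded input is 9×9 and sliding the kernel over it returns 7×7:

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Sliding-window weighted sum with no padding and stride 1."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


image = np.ones((7, 7))
K = np.full((3, 3), 1 / 9)  # 3x3 averaging kernel

# Add one ring of zeros around the input: 7x7 -> 9x9.
padded = np.pad(image, pad_width=1, mode="constant", constant_values=0)
out = conv2d_valid(padded, K)

print(padded.shape, out.shape)  # (9, 9) (7, 7)
print(round(out[0, 0], 4))      # 0.4444: the corner sees 4 real pixels and 5 zeros
```

In Keras, padding="same" in the `Conv1D`/`Conv2D` signatures gives this same-size behavior (with a stride of 1), while padding="valid" applies no padding.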

Arriving at Convolutional Neural Networks

The main types of layers used in Convolutional Neural Networks are:

- Convolutional layer

- Pooling layer

- Fully connected layer

The Convolutional Layer

The convolutional layer applies a set of filters that perform the convolution operation discussed above.

Note that not all the information present in an image is important for image classification. The convolutional layer is smart enough to handle this: its filter weights are learned during training, so it learns to pick out the features that matter for the task!

The output of a convolutional layer is called a feature map. It contains the features computed from the layer's input and its filters.

Convolution layers are not limited to 1-D and 2-D: Keras also provides a 3-D convolution layer (Conv3D), used for volumetric data such as videos or medical scans.

You can find more about these layers in the Keras documentation and tutorials.

The Pooling Layer

Suppose we have an input image with a height, a width, and a depth of 3 channels (for example, RGB).

When we apply a filter with the same depth as the input, we get a 2-D output, also known as a feature map of the input.
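A minimal NumPy sketch of this, assuming a random 7×7 input with 3 channels, a single random 3×3×3 filter, and a hypothetical bias of 0.1. At each position the filter multiplies a 3×3 window across all three channels and sums everything into one number, so the depth collapses and the result is a single 2-D feature map:

```python
import numpy as np

rng = np.random.default_rng(0)

image = rng.random((7, 7, 3))   # height x width x 3 channels (e.g. RGB)
k = 3
kernel = rng.random((k, k, 3))  # one filter with the same depth as the input
bias = 0.1                      # hypothetical bias value

out_h, out_w = image.shape[0] - k + 1, image.shape[1] - k + 1
feature_map = np.zeros((out_h, out_w))
for i in range(out_h):
    for j in range(out_w):
        # Weighted sum over a k x k window across ALL channels -> one number.
        feature_map[i, j] = np.sum(image[i:i + k, j:j + k, :] * kernel) + bias

print(feature_map.shape)  # (5, 5): the depth of 3 collapses to a single 2-D map
```

A convolutional layer with several filters repeats this once per filter and stacks the resulting maps, so the number of filters becomes the depth of the layer's output (the `filters` argument in the Keras signatures).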

Generally, we perform an operation called pooling after computing the feature map.

What Does Pooling Do?

The pooling operation reduces the size of the feature representation, thereby reducing the amount of computation the CNN has to perform!

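In max pooling, the most common type, we slide a window of a specific shape (for example 2×2) along the feature map and keep the maximum value from each portion of the feature map it covers. Average pooling, another common variant, keeps the mean instead.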
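A minimal NumPy sketch of max pooling with a 2×2 window and a stride of 2 (the helper name `max_pool2d` and the 4×4 feature map are made up for illustration). Each non-overlapping 2×2 block is replaced by its maximum, halving the height and width:

```python
import numpy as np


def max_pool2d(feature_map: np.ndarray, size: int = 2, stride: int = 2) -> np.ndarray:
    """Max pooling: keep the largest value in each size x size window."""
    out_h = (feature_map.shape[0] - size) // stride + 1
    out_w = (feature_map.shape[1] - size) // stride + 1
    out = np.empty((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            window = feature_map[i * stride:i * stride + size,
                                 j * stride:j * stride + size]
            out[i, j] = window.max()
    return out


fm = np.array([[1, 3, 2, 1],
               [4, 6, 5, 0],
               [7, 2, 9, 8],
               [1, 0, 3, 4]])
print(max_pool2d(fm))
# [[6 5]
#  [7 9]]
```

Keras provides this as the `tf.keras.layers.MaxPooling2D` layer, and `tf.keras.layers.AveragePooling2D` for average pooling.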

Fully Connected Layer

The output of the final pooling layer is flattened into a vector so it can be passed through the fully connected layers.

This is the brute-force layer of a CNN! In a fully connected layer, every input from the previous layer is connected to every activation unit of the next layer.
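The flatten-then-connect step can be sketched in NumPy as follows, assuming a hypothetical pooled output of shape 2×2×4 and 3 output classes, with random weights standing in for learned ones. The fully connected layer is just a matrix-vector product plus a bias, so every input contributes to every output unit:

```python
import numpy as np

rng = np.random.default_rng(0)

pooled = rng.random((2, 2, 4))  # hypothetical pooled output: 2x2 map, 4 channels
x = pooled.reshape(-1)          # flatten to a vector of 2 * 2 * 4 = 16 values

n_classes = 3                                 # hypothetical number of classes
W = rng.standard_normal((n_classes, x.size))  # one weight per (input, unit) pair
b = np.zeros(n_classes)                       # one bias per unit

scores = W @ x + b  # fully connected layer: each score depends on all 16 inputs
print(x.shape, W.shape, scores.shape)  # (16,) (3, 16) (3,)
```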

Finally, the output of the fully connected layers is passed through a softmax activation function. Softmax turns the final scores into a probability distribution over the classes, and the class with the highest probability is taken as the predicted label, which is how the network performs image classification.
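A minimal softmax sketch in plain Python, assuming hypothetical raw scores for 3 classes. Each score is exponentiated and divided by the sum of all the exponentials, so the outputs are positive and add up to 1:

```python
import math


def softmax(scores: list) -> list:
    """Turn raw scores into probabilities that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.1]                # hypothetical scores for 3 classes
probs = softmax(scores)
predicted = probs.index(max(probs))     # class with the highest probability
print(predicted)  # 0
```

Subtracting the maximum score before exponentiating does not change the result, but it avoids overflow when the scores are large.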

The fully connected layers combine the features extracted by the previous layers to form the final output.

YAY!

We cracked open a huge artificial neural network with our own neural networks!

Conclusion

Hope you had fun learning how Convolutional Neural Networks work! CNNs now come in many more variations, with smarter architectures such as AlexNet, VGGNet, and ResNet.

Check out the Keras blog to learn more!
